Medical Diagnosis: Improving Breast Cancer Detection With A Quantum Neural Network

A Summary in Plain English

My summary of a highly technical article in plain English

In 2020, data scientists from India employed a deep learning neural network to enhance breast cancer detection technology. The scientists began by training their neural network to associate a particular set of data inputs with specific outputs. This approach is known as supervised learning, because every training example comes with its correct answer. Unsupervised learning, by contrast, works with data whose correct outputs are not provided. When the desired outcomes are known in advance, as they are here, supervised learning generally reaches useful results faster and more efficiently.

However, instead of employing a conventional (classical) artificial neural network (referred to here as a CNN) to achieve this task, the data scientists created a quantum convolutional neural network system (QCNN). With quantum computing in the mix, a QCNN is apt to be far more robust and efficient at analyzing large amounts of data. Combined with supervised learning, it has the potential to be far more powerful than a CNN.

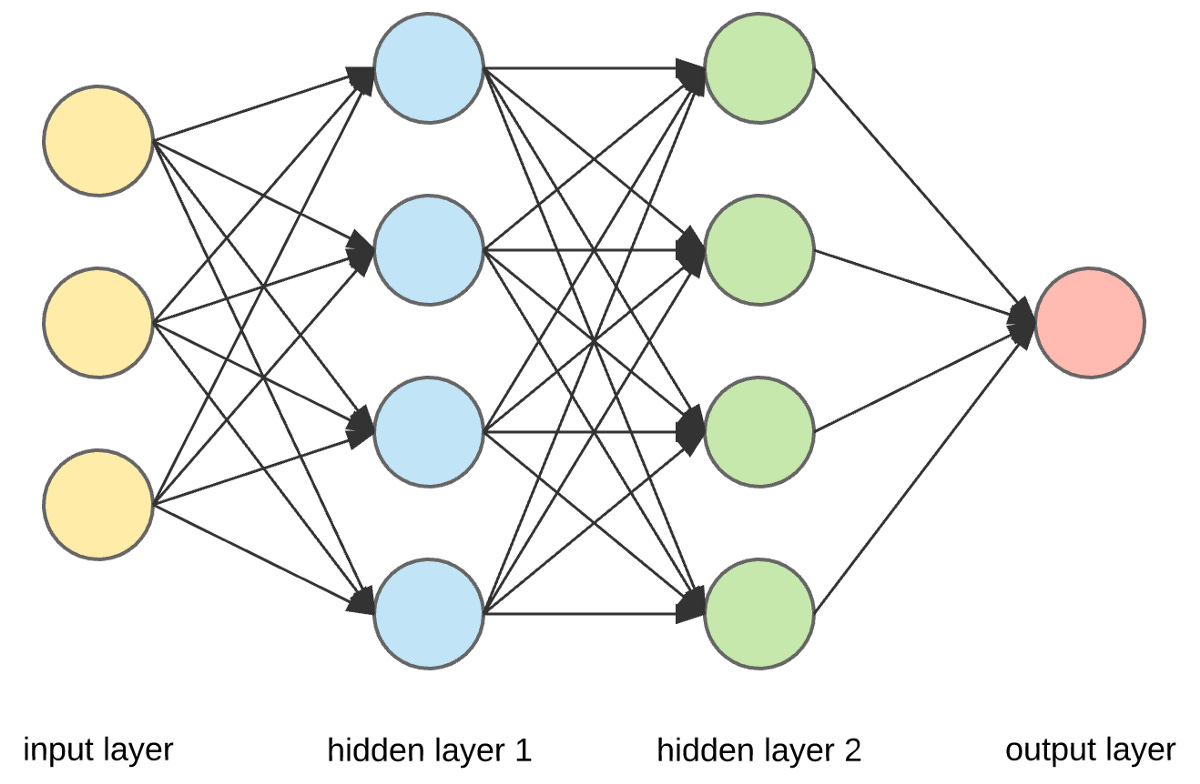

above: a classical neural network

First Steps

The project's scientists initially believed that image recognition was largely the key to breast cancer detection. Consequently, they used Kaggle (an online data science and machine learning platform) to assign a numerical value to each MRI image pixel based on its brightness. These values were then formed into an array and supplied as input to the neural network.
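
To make the pixel-to-array step concrete, here is a minimal sketch in Python. The tiny image, the 0 to 255 brightness range, and the helper name `flatten_and_scale` are all assumptions for illustration; the source does not describe the scientists' actual preprocessing code.

```python
# Hypothetical 8-bit grayscale image: each entry is one pixel's brightness (0-255).
image = [
    [0, 64, 128, 255],
    [32, 96, 160, 224],
]

def flatten_and_scale(pixels, max_value=255):
    """Flatten a 2-D grid of brightness values into one list scaled to [0, 1]."""
    return [value / max_value for row in pixels for value in row]

# One number per pixel, ready to be handed to a network as its input array.
features = flatten_and_scale(image)
print(features)
```

Scaling to the range 0 to 1 is a common convention rather than something the article specifies.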

Unfortunately, the scientists discovered that achieving highly predictive results required a large data matrix. Processing such a matrix would require simulating a 256-qubit system, which is far beyond what can be simulated in practice. As a result, the scientists reduced their input to a 4 × 4 matrix of image pixels.
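
One simple way to shrink an image to a 4 × 4 matrix is to average equal-sized blocks of pixels. The sketch below assumes that method and a hypothetical 16 × 16 starting image (256 pixels); the source does not say how the scientists actually produced their 4 × 4 input, and the function name `downsample` is made up for this example.

```python
def downsample(pixels, out_size=4):
    """Shrink a square image to out_size x out_size by averaging equal blocks."""
    n = len(pixels)
    if n % out_size != 0 or any(len(row) != n for row in pixels):
        raise ValueError("expects a square image whose side is a multiple of out_size")
    block = n // out_size
    result = []
    for r in range(out_size):
        row = []
        for c in range(out_size):
            # Collect every pixel that falls inside this block, then average them.
            cells = [pixels[r * block + i][c * block + j]
                     for i in range(block) for j in range(block)]
            row.append(sum(cells) / len(cells))
        result.append(row)
    return result

# Hypothetical 16 x 16 image with a simple diagonal brightness gradient.
image = [[(r + c) * 8 for c in range(16)] for r in range(16)]
small = downsample(image)
for row in small:
    print(row)
```

The trade-off is the one the article describes: 16 values are far easier to process than 256, at the cost of image detail.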

This change did not substantially impact the project because the neural network incorporated supervised learning. In other words, the neural network was trained with deep learning to associate particular data inputs with one of two specific outcomes (cancerous or non-cancerous). In this instance, the training involved inputting a subset of data from over 100 images.

Nonetheless, the scientists still sought to minimize their loss function (a measure of how far the model's predictions fall from the true labels). So they implemented an optimization algorithm like the variational quantum eigensolver (VQE). In this classical/quantum hybrid algorithm, a quantum circuit evaluates a cost for a given set of parameters, and a classical optimizer adjusts those parameters to drive the cost down.
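
A loss function can be as simple as the average squared gap between predictions and true labels. The sketch below uses mean squared error with made-up labels and predictions; the source does not state which loss function the scientists used, so treat this choice as an assumption.

```python
def mean_squared_error(predictions, labels):
    """Average squared gap between predicted probabilities and true labels."""
    return sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)

labels = [1, 0, 1, 0]               # hypothetical: 1 = cancerous, 0 = non-cancerous
good_guess = [0.9, 0.1, 0.8, 0.2]   # predictions close to the labels
poor_guess = [0.4, 0.6, 0.5, 0.5]   # predictions far from the labels

good_loss = mean_squared_error(good_guess, labels)
poor_loss = mean_squared_error(poor_guess, labels)
print(good_loss, poor_loss)
```

The better set of predictions produces the smaller loss, which is why an optimizer that lowers the loss also improves the predictions.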

Creating Qubit Rotations

For this project, the scientists implemented their 10-qubit QCNN on IBM's cloud computing platform. After inputting the image data (the 4 × 4 matrix of image pixels), these qubits began acting on the data to perform their magic (in conjunction with the deep learning algorithm).

More precisely, these qubits employ their Z rotation gates and X rotation gates (animation at link) to optimize the QCNN's training parameters. The input data is processed through each qubit's initial rotation.
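
For readers who want to see what a rotation gate does, here is a small single-qubit simulation using the standard matrices for X and Z rotations. The angles are arbitrary and the helper names are made up for this sketch; it is not the circuit from the paper.

```python
import cmath
import math

def rx(theta):
    """Standard matrix for a rotation about the X axis by angle theta."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]

def rz(theta):
    """Standard matrix for a rotation about the Z axis by angle theta."""
    return [[cmath.exp(-1j * theta / 2), 0], [0, cmath.exp(1j * theta / 2)]]

def apply(gate, state):
    """Multiply a 2 x 2 gate by a 2-element state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def probabilities(state):
    """Chance of measuring 0 and of measuring 1."""
    return [abs(amplitude) ** 2 for amplitude in state]

zero = [1, 0]                                # the qubit starts in state 0
after_x = apply(rx(math.pi / 2), zero)       # X rotation by a quarter turn
after_xz = apply(rz(math.pi / 3), after_x)   # followed by a Z rotation

print(probabilities(after_x))
print(probabilities(after_xz))
```

The X rotation changes the odds of measuring 0 or 1, while the Z rotation only changes the qubit's phase, so the two printed lists match up to rounding.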

Whereas a CNN limits data input parameters to a single neuronal connection, a QCNN can expand this limit exponentially based on how many qubits are used. For example, data input can be 5-dimensional in a 5-qubit neural network, as each input affects the Y rotation parameter, and the output can be a 5-bit number. Since each qubit is three-dimensional, three data input parameters can be used, leading to a 72-dimensional parameter space.
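
The following sketch illustrates the 5-qubit idea under one common assumption: each input value between 0 and 1 sets the Y rotation angle of its own qubit (angle = π × value). The mapping, the sample values, and the function names are illustrative, not taken from the paper.

```python
import math

def ry_state(theta):
    """State of one qubit after a Y rotation by theta applied to state 0."""
    return [math.cos(theta / 2), math.sin(theta / 2)]

def encode(features):
    """Map each feature in [0, 1] to one qubit via theta = pi * feature."""
    return [ry_state(math.pi * x) for x in features]

def joint_amplitudes(qubits):
    """Combine single-qubit states; the first qubit is the leftmost bit."""
    amplitudes = [1.0]
    for q in qubits:
        amplitudes = [a * b for a in amplitudes for b in q]
    return amplitudes

features = [0.0, 0.25, 0.5, 0.75, 1.0]   # hypothetical 5-dimensional input
qubits = encode(features)
amps = joint_amplitudes(qubits)

# Five qubits span 2**5 = 32 possible 5-bit outcomes.
print(len(amps))
# Chance that each qubit, measured alone, reads 1.
print([q[1] ** 2 for q in qubits])
```

Five inputs are enough to describe a state spread over 32 possible 5-bit readouts, which is the kind of growth the paragraph above refers to.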

If you can visualize this explanation, you can understand why quantum computing is so powerful. It reflects the difference between a spherical representation of possible data points and a 2-dimensional representative model.

With these qubits operating, a deep learning algorithm can gradually minimize the deviation between what the network predicts for a given input (the output label) and what it was supposed to predict (the true label). Once the scientists implemented a deep learning algorithm, the QCNN gave them quicker and more accurate prediction results.
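
Here is a toy version of that training loop, assuming a single qubit, a single trainable rotation angle `w`, a squared-error loss, and made-up data. The qubit's measurement probability is computed with a formula rather than on quantum hardware, and the gradient uses the parameter-shift rule, a standard technique for rotation gates. None of these choices is confirmed by the source.

```python
import math

def predict(x, w):
    """Chance of measuring 1 after Y rotations by pi*x (data) and w (trainable)."""
    return math.sin((math.pi * x + w) / 2) ** 2

def loss(w, xs, ys):
    """Mean squared deviation between predictions and true labels."""
    return sum((predict(x, w) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def loss_gradient(w, xs, ys):
    """Gradient of the loss, using the parameter-shift rule for each prediction."""
    total = 0.0
    for x, y in zip(xs, ys):
        # Slope of the prediction with respect to w, from two shifted evaluations.
        slope = (predict(x, w + math.pi / 2) - predict(x, w - math.pi / 2)) / 2
        total += 2 * (predict(x, w) - y) * slope
    return total / len(xs)

xs = [0.1, 0.2, 0.8, 0.9]   # hypothetical brightness features
ys = [0, 0, 1, 1]           # hypothetical true labels

w = 1.5                     # deliberately poor starting angle
initial_loss = loss(w, xs, ys)
for _ in range(300):
    w -= 0.5 * loss_gradient(w, xs, ys)   # plain gradient descent step
final_loss = loss(w, xs, ys)
print(initial_loss, final_loss)
```

Each pass nudges the angle in the direction that lowers the loss, so the gap between the output label and the true label shrinks over time.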

End Result

Relying on a quantum neural network for diagnosis raises concerns about the potential for false positives and negatives. As a result, detection results must be judged on specificity (the share of non-cancerous cases correctly cleared), sensitivity (the share of cancerous cases correctly flagged), and accuracy (the share of all cases classified correctly). In this case, the QCNN provides a 99.6% specificity, a 97.7% sensitivity, and a 98.9% accuracy rate. While seemingly impressive, the QCNN's results were not dramatically better than those of a conventional neural network. The QCNN did work exponentially faster, however.
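
The three measures come straight from counting correct and incorrect predictions. The counts below are invented purely to show the arithmetic and are not the study's data.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Compute sensitivity, specificity, and accuracy from prediction counts.

    tp: cancerous cases correctly flagged      fn: cancerous cases missed
    tn: non-cancerous cases correctly cleared  fp: non-cancerous cases flagged
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }

# Hypothetical counts for 150 test images.
metrics = diagnostic_metrics(tp=45, fp=5, tn=95, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

A false negative (a missed cancer) lowers sensitivity, while a false positive (a false alarm) lowers specificity, which is why both are reported alongside overall accuracy.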